Robotic Transformer 2 (RT-2) is a novel vision-language-action (VLA) model that learns from both web and robotics data, and translates this knowledge into generalised instructions for robotic control.

High-capacity vision-language models (VLMs) are trained on web-scale datasets, making these systems remarkably good at recognising visual or language patterns and operating across different languages. But for robots to achieve a similar level of competency, they would need to collect robot data, first-hand, across every object, environment, task, and situation.

In our paper, we introduce Robotic Transformer 2 (RT-2), a novel vision-language-action (VLA) model that learns from both web and robotics data, and translates this knowledge into generalised instructions for robotic control, while retaining web-scale capabilities.

This work builds upon Robotic Transformer 1 (RT-1), a model trained on multi-task demonstrations that can learn combinations of tasks and objects seen in the robotic data. More specifically, our work used RT-1 robot demonstration data that was collected with 13 robots over 17 months in an office kitchen environment.

RT-2 shows improved generalisation capabilities, as well as semantic and visual understanding beyond the robotic data it was exposed to. This includes interpreting new commands and responding to user commands by performing rudimentary reasoning, such as reasoning about object categories or high-level descriptions.

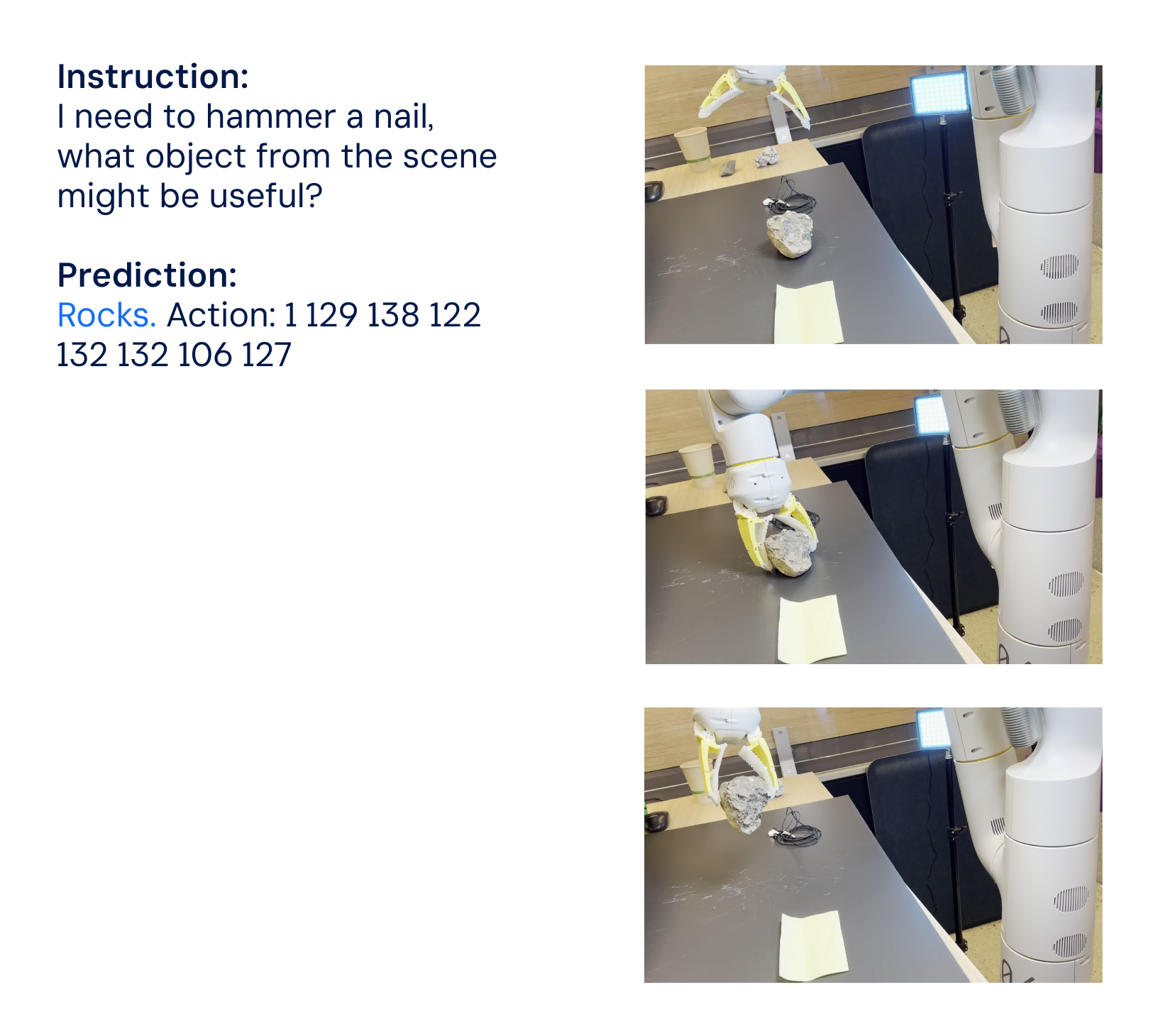

We also show that incorporating chain-of-thought reasoning allows RT-2 to perform multi-stage semantic reasoning, like deciding which object could be used as an improvised hammer (a rock), or which type of drink is best for a tired person (an energy drink).

Adapting VLMs for robot control

RT-2 builds upon VLMs that take one or more images as input and produce a sequence of tokens that, conventionally, represent natural language text. Such VLMs have been successfully trained on web-scale data to perform tasks like visual question answering, image captioning, or object recognition. In our work, we adapt Pathways Language and Image model (PaLI-X) and Pathways Language model Embodied (PaLM-E) to act as the backbones of RT-2.

To control a robot, it must be trained to output actions. We address this challenge by representing actions as tokens in the model's output, similar to language tokens, and describe actions as strings that can be processed by standard natural language tokenizers, shown here:

The string starts with a flag that indicates whether to continue or to terminate the current episode without executing the subsequent commands. It is followed by the commands to change the position and rotation of the end-effector, as well as the desired extension of the robot gripper.
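To make the string format concrete, here is a minimal sketch of how one action could be discretised and rendered as a space-separated string. It follows the field order described above: the flag, then three position and three rotation changes, then the gripper extension. Several details are assumptions for illustration and do not come from this post: 256 uniform bins per continuous dimension, the value ranges, the 0/1 encoding of the flag, and the names `Action`, `discretise` and `action_to_string` (all hypothetical).

```python
"""Sketch: discretise a robot action and render it as a token string.

Assumptions (not from the post): 256 uniform bins per continuous
dimension, the value ranges below, 1 = terminate / 0 = continue, and
all helper names are hypothetical.
"""
from dataclasses import dataclass

NUM_BINS = 256  # assumed number of bins per continuous dimension


@dataclass
class Action:
    terminate: bool                        # True ends the current episode
    delta_pos: tuple[float, float, float]  # end-effector translation (x, y, z)
    delta_rot: tuple[float, float, float]  # end-effector rotation (x, y, z)
    gripper: float                         # desired gripper extension


def discretise(value: float, low: float, high: float,
               num_bins: int = NUM_BINS) -> int:
    """Clip value to [low, high] and map it to an integer bin in [0, num_bins - 1]."""
    clipped = min(max(value, low), high)
    fraction = (clipped - low) / (high - low)
    # fraction == 1.0 would give num_bins, so clamp to the last bin
    return min(int(fraction * num_bins), num_bins - 1)


def action_to_string(action: Action,
                     pos_range=(-1.0, 1.0),   # hypothetical range
                     rot_range=(-1.0, 1.0),   # hypothetical range
                     grip_range=(0.0, 1.0)) -> str:  # hypothetical range
    """Render flag, 3 position bins, 3 rotation bins and 1 gripper bin as text."""
    tokens = [1 if action.terminate else 0]
    tokens += [discretise(v, *pos_range) for v in action.delta_pos]
    tokens += [discretise(v, *rot_range) for v in action.delta_rot]
    tokens.append(discretise(action.gripper, *grip_range))
    return " ".join(str(t) for t in tokens)


if __name__ == "__main__":
    example = Action(False, (0.0, 0.5, -1.0), (0.0, 0.0, 1.0), 1.0)
    print(action_to_string(example))  # 0 128 192 0 128 128 255 255
```

Because the result is plain text made of integers, any standard tokenizer can process it without changes. That is the property the approach relies on.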

We use the same discretised version of robot actions as in RT-1, and show that converting it to a string representation makes it possible to train VLMs on robotic data, as the input and output spaces of such models don't need to be changed.
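Going the other way, the text a VLM produces has to be turned back into a numeric command before a robot can execute it. The sketch below parses such a string and maps each bin to the centre of its interval. The post does not describe how RT-2 handles malformed outputs, so this sketch simply rejects them with a `ValueError`. It assumes the same hypothetical settings as a plain discretisation scheme would use: 256 bins, a 0/1 flag, and illustrative value ranges. The names `undiscretise` and `parse_action_string` are hypothetical.

```python
"""Sketch: parse a generated action string back into continuous values.

Assumptions (not from the post): 256 uniform bins, flag 1 = terminate,
the value ranges below, and all function names are hypothetical.
"""

NUM_BINS = 256  # assumed number of bins per continuous dimension
NUM_FIELDS = 8  # flag + 3 position + 3 rotation + 1 gripper


def undiscretise(bin_index: int, low: float, high: float,
                 num_bins: int = NUM_BINS) -> float:
    """Return the centre of bin `bin_index` within [low, high]."""
    width = (high - low) / num_bins
    return low + (bin_index + 0.5) * width


def parse_action_string(text: str,
                        pos_range=(-1.0, 1.0),   # hypothetical range
                        rot_range=(-1.0, 1.0),   # hypothetical range
                        grip_range=(0.0, 1.0)) -> dict:  # hypothetical range
    """Validate a space-separated action string and decode it."""
    parts = text.split()
    if len(parts) != NUM_FIELDS:
        raise ValueError(f"expected {NUM_FIELDS} fields, got {len(parts)}")
    values = [int(p) for p in parts]  # int() raises ValueError on non-integers
    flag, bins = values[0], values[1:]
    if flag not in (0, 1):
        raise ValueError(f"invalid terminate flag: {flag}")
    if any(not 0 <= b < NUM_BINS for b in bins):
        raise ValueError("bin index out of range")
    return {
        "terminate": flag == 1,
        "delta_pos": tuple(undiscretise(b, *pos_range) for b in bins[0:3]),
        "delta_rot": tuple(undiscretise(b, *rot_range) for b in bins[3:6]),
        "gripper": undiscretise(bins[6], *grip_range),
    }


if __name__ == "__main__":
    print(parse_action_string("0 128 192 0 128 128 255 255"))
```

Decoding to bin centres means each recovered value is within half a bin width of the value that was originally encoded.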

Generalisation and emergent skills

We performed a series of qualitative and quantitative experiments on our RT-2 models, covering over 6,000 robotic trials. To explore RT-2's emergent capabilities, we first searched for tasks that would require combining knowledge from web-scale data with the robot's experience, and then defined three categories of skills: symbol understanding, reasoning, and human recognition.

Each task required understanding visual-semantic concepts and the ability to perform robotic control to operate on these concepts. Commands such as "pick up the bag about to fall off the table" or "move banana to the sum of two plus one" ask the robot to perform a manipulation task on objects or scenarios never seen in the robotic data, so carrying them out required knowledge transferred from web-based data.

Across all categories, we observed increased generalisation performance (more than 3x improvement) compared to previous baselines, such as previous RT-1 models and models like Visual Cortex (VC-1), which were pre-trained on large visual datasets.

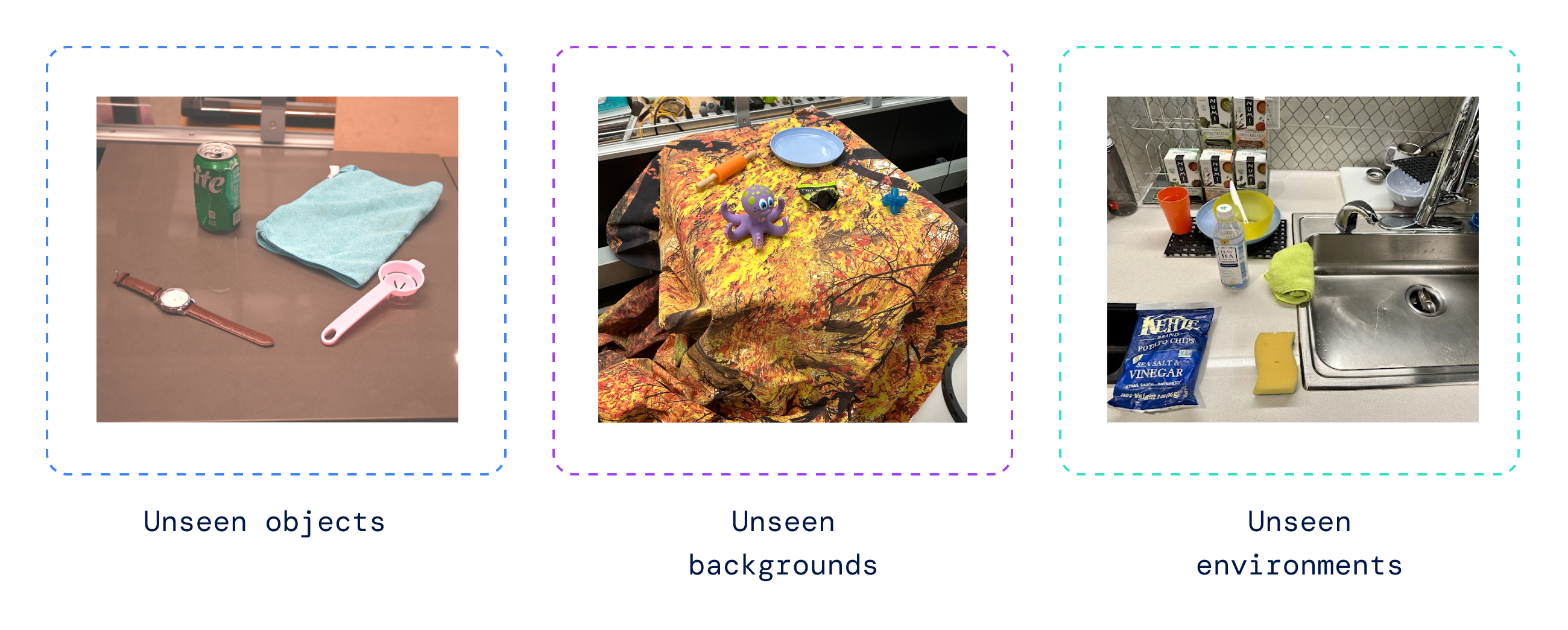

We also performed a series of quantitative evaluations. These began with the original RT-1 tasks, for which we have examples in the robot data, and continued with varying degrees of objects, backgrounds, and environments the robot had not seen before, which required the robot to generalise from VLM pre-training.

RT-2 retained its performance on the original tasks seen in the robot data and improved performance on previously unseen scenarios from RT-1's 32% to 62%, showing the considerable benefit of large-scale pre-training.

In addition, we observed significant improvements over baselines pre-trained on visual-only tasks, such as VC-1 and Reusable Representations for Robotic Manipulation (R3M), and over algorithms that use VLMs for object identification, such as Manipulation of Open-World Objects (MOO).

Evaluating our model on the open-source Language Table suite of robotic tasks, we achieved a success rate of 90% in simulation, substantially improving over previous baselines including BC-Z (72%), RT-1 (74%), and LAVA (77%).

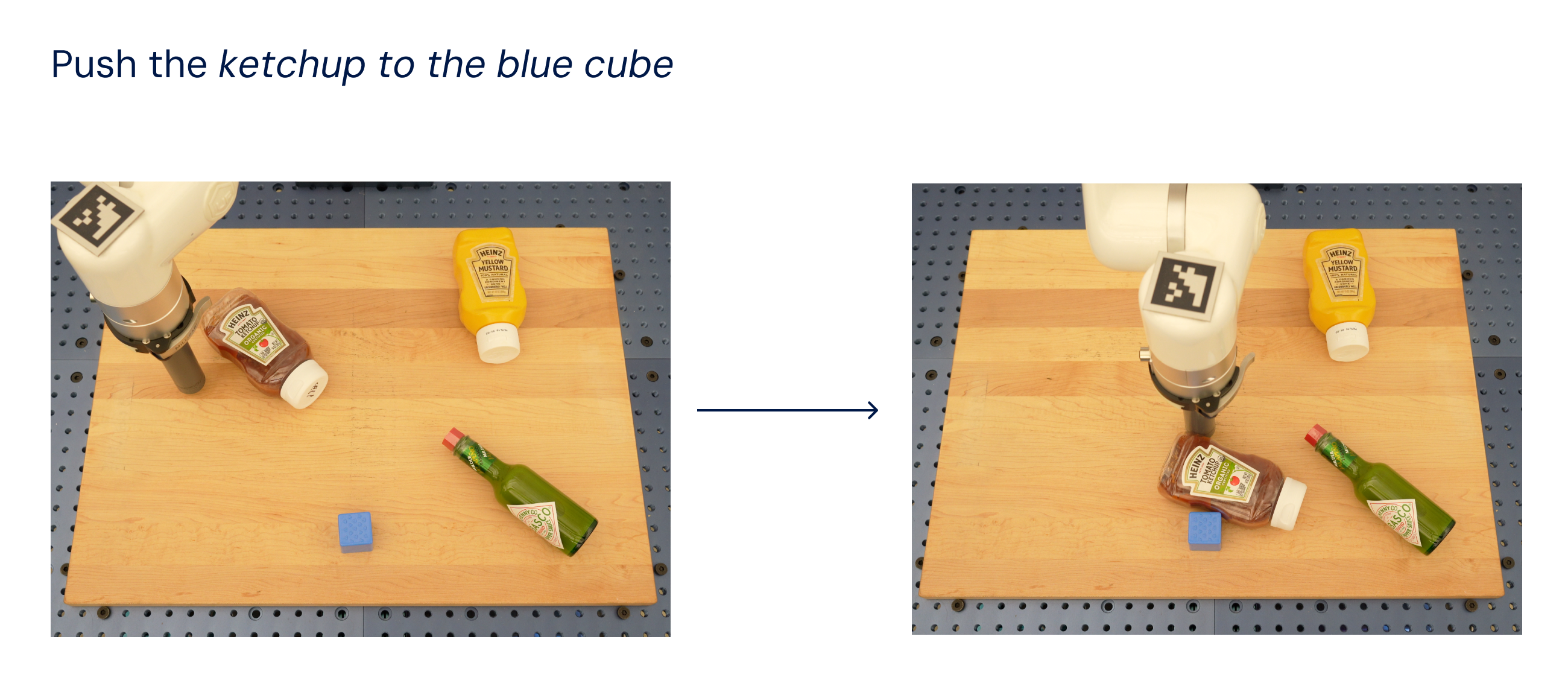

We then evaluated the same model in the real world, since it was trained on both simulation and real data. The evaluation demonstrated its ability to generalise to novel objects, as shown below, where none of the objects except the blue cube were present in the training dataset.

Inspired by chain-of-thought prompting methods used in LLMs, we probed our models to combine robotic control with chain-of-thought reasoning. The goal was to learn long-horizon planning and low-level skills within a single model.

Specifically, we fine-tuned a variant of RT-2 for just a few hundred gradient steps to increase its ability to use language and actions jointly. Then we augmented the data to include an additional "Plan" step, which first describes in natural language the purpose of the action the robot is about to take, followed by "Action" and the action tokens. Here we show an example of such reasoning and the robot's resulting behaviour:
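The augmented target described above can be pictured as a single text sequence: a natural-language plan, then an action string. The sketch below builds such a sequence and splits a generated one back into its two parts. The exact separators and whitespace are assumptions, because the post only names the "Plan" and "Action" steps. The plan text and action string in the example are hypothetical, and so are the function names.

```python
"""Sketch: build and split a "Plan ... Action ..." training target.

Assumptions (not from the post): the exact "Plan:" / "Action:" markers,
the single-space layout, and all function names are hypothetical.
"""

PLAN_MARKER = "Plan:"
ACTION_MARKER = "Action:"


def format_plan_action(plan: str, action_tokens: str) -> str:
    """Join a natural-language plan and an action string into one target."""
    return f"{PLAN_MARKER} {plan} {ACTION_MARKER} {action_tokens}"


def split_plan_action(text: str) -> tuple[str, str]:
    """Split a generated sequence into (plan, action_tokens)."""
    if not text.startswith(PLAN_MARKER):
        raise ValueError("sequence does not start with a plan")
    body = text[len(PLAN_MARKER):]
    # Use the last "Action:" so a plan that mentions the word is still handled;
    # the action part itself is assumed to contain only integers.
    plan, sep, action = body.rpartition(ACTION_MARKER)
    if not sep:
        raise ValueError("sequence has no action part")
    return plan.strip(), action.strip()


if __name__ == "__main__":
    target = format_plan_action("pick up the rock", "0 128 192 0 128 128 255 255")
    print(target)
    print(split_plan_action(target))
```

Keeping the plan and the action in one sequence means that the same decoder produces both, which is the point of training the two within a single model.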

With this process, RT-2 can carry out more involved commands that require reasoning about the intermediate steps needed to accomplish a user instruction. Thanks to its VLM backbone, RT-2 can also plan from both image and text commands, enabling visually grounded planning. Current plan-and-act approaches like SayCan, by contrast, cannot see the real world and rely entirely on language.

Advancing robotic control

RT-2 shows that vision-language models (VLMs) can be transformed into powerful vision-language-action (VLA) models, which can directly control a robot by combining VLM pre-training with robotic data.

With two instantiations of VLAs based on PaLM-E and PaLI-X, RT-2 produces highly improved robot policies. More importantly, it leads to significantly better generalisation performance and emergent capabilities inherited from web-scale vision-language pre-training.

RT-2 is not only a simple and effective modification of existing VLMs. It also shows the promise of building a general-purpose physical robot that can reason, solve problems, and interpret information to perform a diverse range of tasks in the real world.