New, formal definition of agency gives clear principles for causal modelling of AI agents and the incentives they face

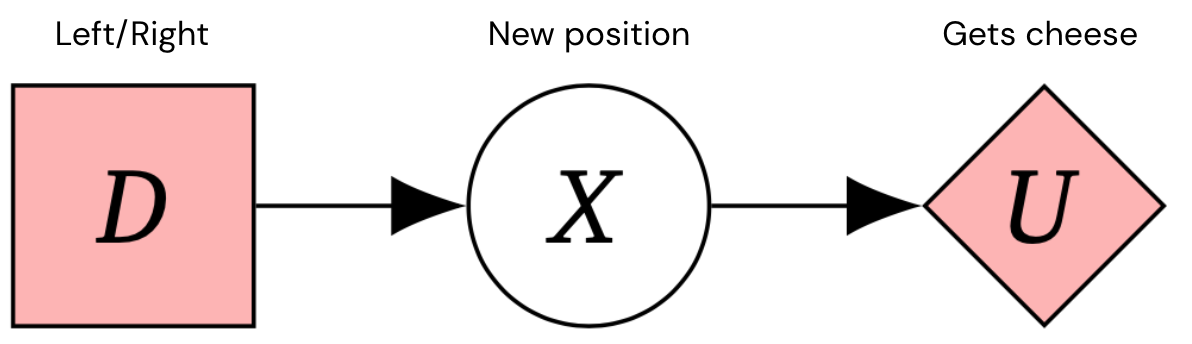

We want to build safe, aligned artificial general intelligence (AGI) systems that pursue the intended goals of their designers. Causal influence diagrams (CIDs) are a way to model decision-making situations that allows us to reason about agent incentives. For example, here is a CID for a 1-step Markov decision process, a typical framework for decision-making problems.

By relating training setups to the incentives that shape agent behaviour, CIDs help illuminate potential risks before training an agent and can inspire better agent designs. But how do we know when a CID is an accurate model of a training setup?

Our new paper, Discovering Agents, introduces new ways of tackling these issues, including:

- The first formal causal definition of agents: agents are systems that would adapt their policy if their actions influenced the world in a different way

- An algorithm for discovering agents from empirical data

- A translation between causal models and CIDs

- A resolution of earlier confusions caused by incorrect causal modelling of agents

Combined, these results provide an extra layer of assurance that a modelling mistake hasn't been made, which means that CIDs can be used to analyse an agent's incentives and safety properties with greater confidence.

Example: modelling a mouse as an agent

To help illustrate our method, consider a world containing three squares. A mouse starts in the middle square, chooses to go left or right, arrives at its next position, and then potentially gets some cheese. The floor is icy, so the mouse might slip. Sometimes the cheese is on the right, but sometimes it is on the left.

This can be represented by the following CID:

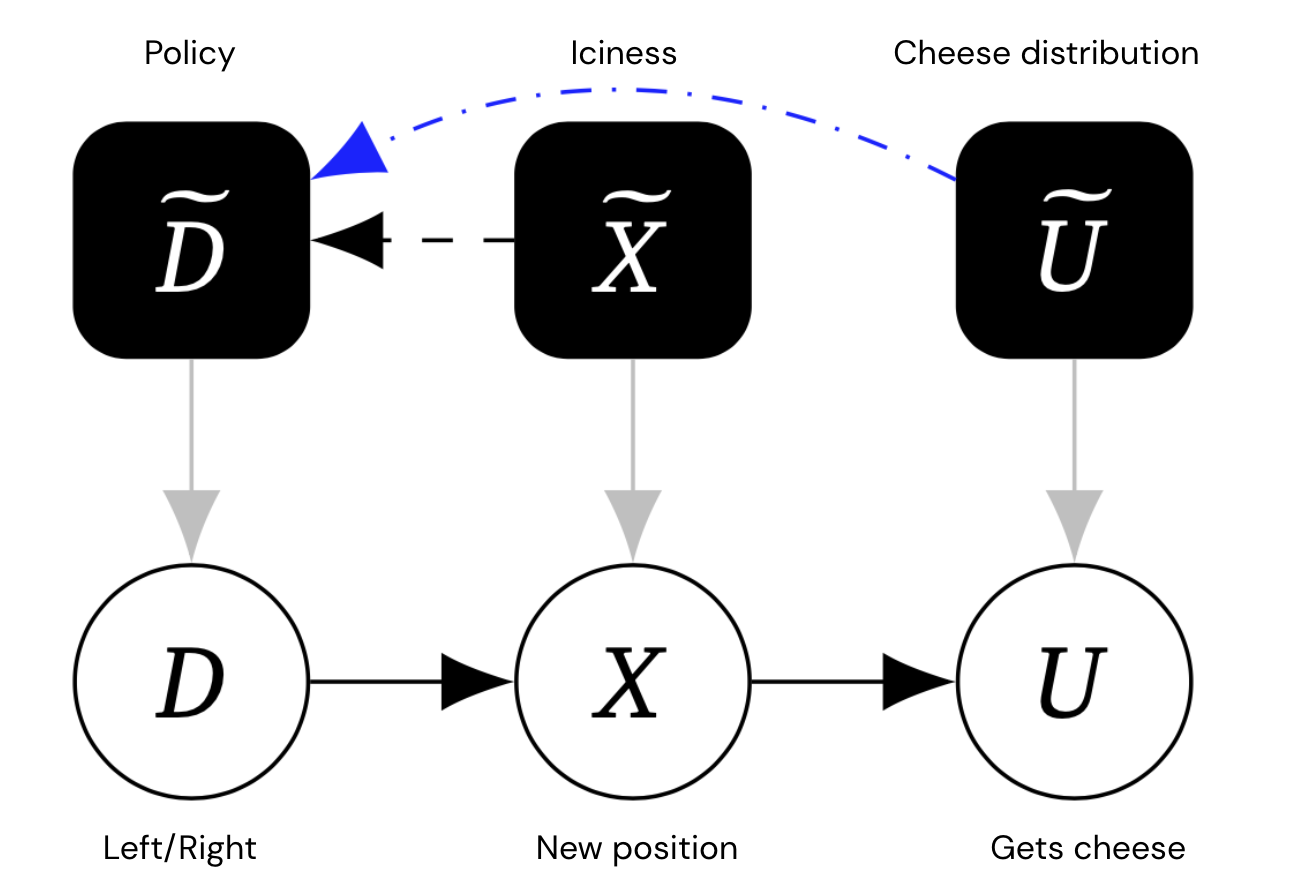
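
As a rough illustration, the mouse CID can be written down as a small typed graph. The node names D (the mouse's decision), X (its position) and U (whether it gets the cheese) follow the labels used for this example later in the post. The `CID` class and its methods are hypothetical helpers for this sketch, not an API from the paper.

```python
from dataclasses import dataclass, field


@dataclass
class CID:
    """Toy causal influence diagram: typed nodes plus directed edges."""
    kinds: dict                      # node name -> "decision" | "chance" | "utility"
    edges: set = field(default_factory=set)  # set of (parent, child) pairs

    def parents(self, node):
        return sorted(a for a, b in self.edges if b == node)

    def children(self, node):
        return sorted(b for a, b in self.edges if a == node)

    def nodes_of_kind(self, kind):
        return sorted(n for n, k in self.kinds.items() if k == kind)


# Assumed structure for the mouse example: D -> X -> U.
mouse_cid = CID(
    kinds={"D": "decision", "X": "chance", "U": "utility"},
    edges={("D", "X"), ("X", "U")},
)

print(mouse_cid.parents("U"))    # the position is the only parent of the utility
print(mouse_cid.children("U"))   # the utility has no children
```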

The intuition that the mouse would choose a different behaviour for different environment settings (iciness, cheese distribution) can be captured by a mechanised causal graph, which, for each (object-level) variable, also includes a mechanism variable that governs how the variable depends on its parents. Crucially, we allow for links between mechanism variables.

This graph contains additional mechanism nodes in black, representing the mouse's policy, the iciness and the cheese distribution.

Edges between mechanisms represent direct causal influence. The blue edges are special terminal edges: roughly, mechanism edges A~ → B~ that would still be there even if the object-level variable A were altered so that it had no outgoing edges.

In the example above, since U has no children, its mechanism edge must be terminal. But the mechanism edge X~ → D~ is not terminal, because if we cut X off from its child U, then the mouse will no longer adapt its decision (because its position won't affect whether it gets the cheese).
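
To make the role of the mechanism edges concrete, here is a minimal numerical sketch of the mouse example. It assumes a slip probability `slip` standing in for the iciness mechanism and a probability `cheese_right` standing in for the cheese distribution. It also assumes the mouse picks the action with the highest expected utility and breaks ties by going left. The function names and the constant utility used when X is cut off from U are illustrative choices, not taken from the paper.

```python
ACTIONS = ("left", "right")


def expected_utility(action, slip, cheese_right, x_affects_u=True):
    """Probability that the mouse gets the cheese after choosing `action`."""
    if not x_affects_u:
        # X has been cut off from U: the outcome no longer depends on the
        # mouse's position (assumed constant for this sketch).
        return 0.5
    # The mouse ends up on the intended side unless it slips.
    p_end_right = 1 - slip if action == "right" else slip
    return p_end_right * cheese_right + (1 - p_end_right) * (1 - cheese_right)


def policy(slip, cheese_right, x_affects_u=True):
    """The mouse's policy as a function of the other mechanisms."""
    # max() returns the first maximal element, so ties go to "left".
    return max(ACTIONS, key=lambda a: expected_utility(a, slip, cheese_right, x_affects_u))


# Changing the cheese distribution changes the policy (edge U~ -> D~).
print(policy(slip=0.1, cheese_right=0.8), policy(slip=0.1, cheese_right=0.2))

# Changing the iciness changes the policy (edge X~ -> D~).
print(policy(slip=0.1, cheese_right=0.8), policy(slip=0.9, cheese_right=0.8))

# With X cut off from U, the policy no longer responds to iciness,
# which is why X~ -> D~ is not a terminal edge.
print(policy(0.1, 0.8, x_affects_u=False), policy(0.9, 0.8, x_affects_u=False))
```

In this toy setting the policy responds to both mechanisms while position matters, and stops responding to iciness once position is irrelevant to the cheese.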

Causal discovery of agents

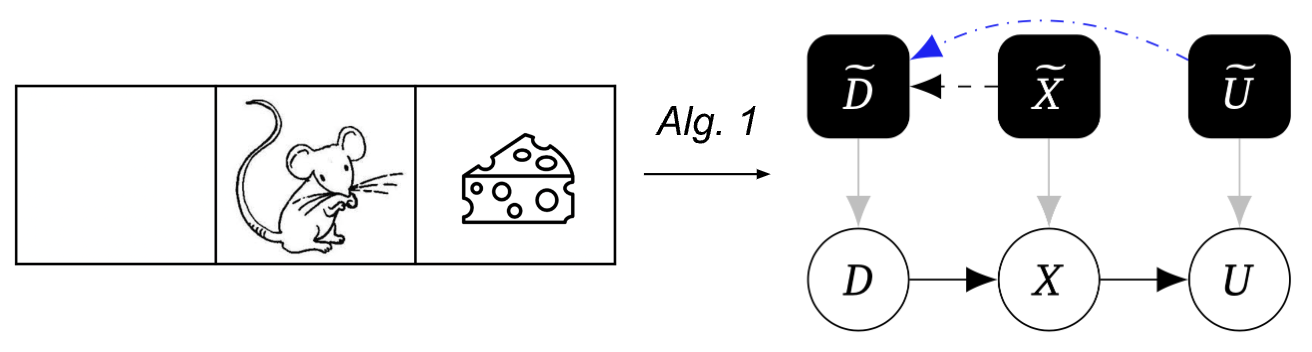

Causal discovery infers a causal graph from experiments involving interventions. In particular, one can discover an arrow from a variable A to a variable B by experimentally intervening on A and checking whether B responds, even when all other variables are held fixed.
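
The intervention test described above can be sketched in a few lines. This is a simplified illustration rather than the paper's algorithm: it assumes a deterministic toy system with small finite domains, whereas a real experiment would compare distributions of outcomes. The names `discover_edges` and `chain_model` are hypothetical.

```python
from itertools import product


def discover_edges(model, domains):
    """Find edges A -> B by intervening on A while holding all else fixed.

    `model(interventions)` returns a dict of variable values, where
    `interventions` maps each intervened variable to its forced value.
    """
    variables = list(domains)
    edges = set()
    for a, b in product(variables, variables):
        if a == b:
            continue
        others = [v for v in variables if v not in (a, b)]
        # Try every way of holding the remaining variables fixed.
        for fixed_values in product(*(domains[v] for v in others)):
            fixed = dict(zip(others, fixed_values))
            responses = {model({**fixed, a: value})[b] for value in domains[a]}
            if len(responses) > 1:  # B responded to the intervention on A
                edges.add((a, b))
                break
    return edges


def chain_model(do):
    """Toy system D -> X -> U; each variable copies its parent unless intervened on."""
    d = do.get("D", 1)
    x = do.get("X", d)
    u = do.get("U", x)
    return {"D": d, "X": x, "U": u}


domains = {"D": (0, 1), "X": (0, 1), "U": (0, 1)}
print(sorted(discover_edges(chain_model, domains)))
```

Holding X fixed while intervening on D is what prevents the sketch from reporting a direct edge from D to U: D only influences U through X.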

Our first algorithm uses this technique to discover the mechanised causal graph:

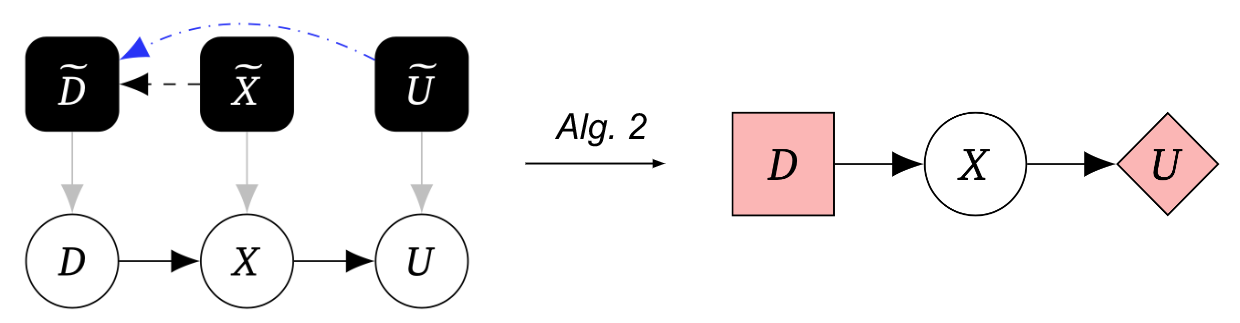

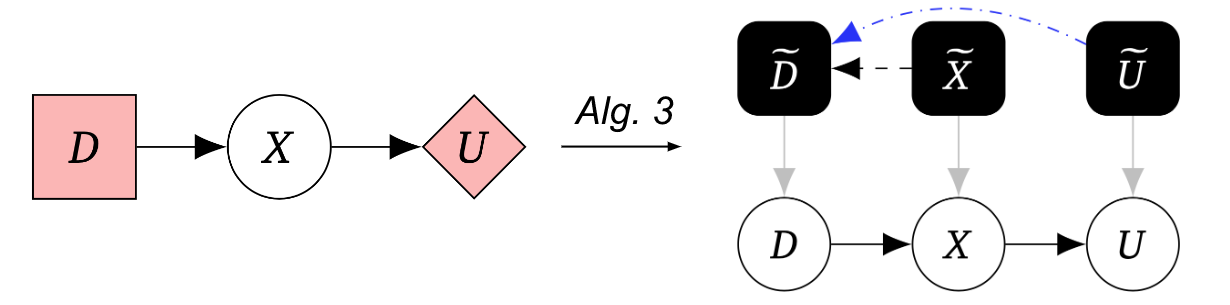

Our second algorithm transforms this mechanised causal graph into a game graph:

Taken together, Algorithm 1 followed by Algorithm 2 allows us to discover agents from causal experiments, representing them using CIDs.
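
The post does not spell out the rule Algorithm 2 uses, so the following is only one plausible reading, based on the mouse example. It assumes that a variable is labelled a decision when its mechanism receives a terminal edge, and a utility when its mechanism sends one. The function `identify_decisions_and_utilities` and the edge encoding are hypothetical.

```python
def identify_decisions_and_utilities(mechanism_edges):
    """Label object-level variables from the terminal mechanism edges.

    `mechanism_edges` holds (source, target, is_terminal) triples, each
    standing for a mechanism edge source~ -> target~.
    """
    edges = list(mechanism_edges)
    # Assumed rule: an incoming terminal edge marks a decision,
    # an outgoing terminal edge marks a utility.
    decisions = sorted({target for _, target, terminal in edges if terminal})
    utilities = sorted({source for source, _, terminal in edges if terminal})
    return decisions, utilities


# Mechanism edges of the mouse example: U~ -> D~ is terminal, X~ -> D~ is not.
mouse_mechanism_edges = [("U", "D", True), ("X", "D", False)]
decisions, utilities = identify_decisions_and_utilities(mouse_mechanism_edges)
print(decisions, utilities)
```

Under this assumed rule the mouse's choice D comes out as the decision and the cheese outcome U as the utility, matching the CID for the example.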

Our third algorithm transforms the game graph into a mechanised causal graph, allowing us to translate between the game and mechanised causal graph representations under some additional assumptions:

Better safety tools to model AI agents

We proposed the first formal causal definition of agents. Grounded in causal discovery, our key insight is that agents are systems that adapt their behaviour in response to changes in how their actions influence the world. Indeed, our Algorithms 1 and 2 describe a precise experimental process that can help assess whether a system contains an agent.

Interest in causal modelling of AI systems is rapidly growing, and our research grounds this modelling in causal discovery experiments. Our paper demonstrates the potential of our approach by improving the safety analysis of several example AI systems, and shows that causality is a useful framework for discovering whether there is an agent in a system, a key concern for assessing risks from AGI.

Excited to learn more? Check out our paper. Feedback and comments are most welcome.

Author:

Date: 2022-08-17 20:00:00