Microsoft on Monday said it took steps to correct a glaring security gaffe that led to the exposure of 38 terabytes of private data.

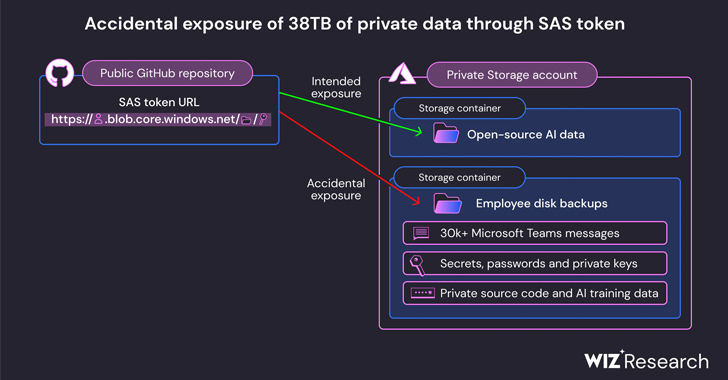

The leak was discovered in the company’s AI GitHub repository and is said to have been inadvertently made public when publishing a bucket of open-source training data, Wiz said. It also included a disk backup of two former employees’ workstations containing secrets, keys, passwords, and over 30,000 internal Teams messages.

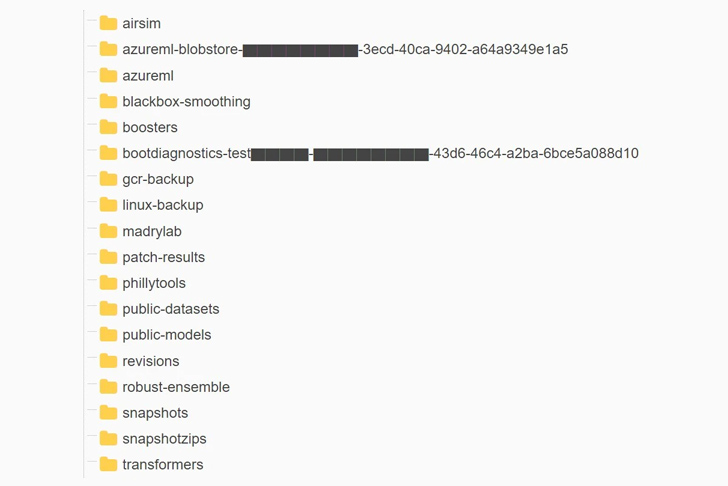

The repository, named “robust-models-transfer,” is no longer accessible. Prior to its takedown, it featured source code and machine learning models pertaining to a 2020 research paper titled “Do Adversarially Robust ImageNet Models Transfer Better?”

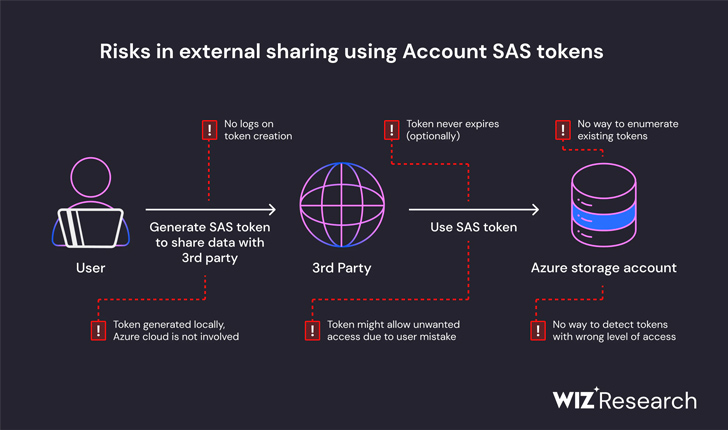

“The exposure came as the result of an overly permissive SAS token – an Azure feature that allows users to share data in a manner that is both hard to track and hard to revoke,” Wiz said in a report. The issue was reported to Microsoft on June 22, 2023.

Specifically, the repository’s README.md file instructed developers to download the models from an Azure Storage URL that accidentally also granted access to the entire storage account, thereby exposing additional private data.

“In addition to the overly permissive access scope, the token was also misconfigured to allow ‘full control’ permissions instead of read-only,” Wiz researchers Hillai Ben-Sasson and Ronny Greenberg said. “Meaning, not only could an attacker view all the files in the storage account, but they could delete and overwrite existing files as well.”
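To illustrate the two problems the researchers describe (account-wide scope and more than read-only permissions), here is a minimal Python sketch that inspects the query string of a SAS URL. It relies on documented Azure SAS query parameters: `srt` and `ss` appear in account SAS tokens, `sr` appears in service SAS tokens, and `sp` lists the granted permissions as letters such as `r` (read), `w` (write), and `d` (delete). The function name `describe_sas`, the storage account `exampleaccount`, and the assumption that a download link needs only `r` and `l` are hypothetical. This is not how Wiz or Microsoft analyzed the token, and it neither validates the signature nor contacts Azure.

```python
from urllib.parse import parse_qs, urlparse

# Assumption: a token meant only for downloading needs read ("r") and list ("l").
READ_ONLY_PERMISSIONS = {"r", "l"}


def describe_sas(url: str) -> dict:
    """Summarize the scope and permissions of a SAS URL.

    Illustrative sketch only: it inspects query parameters and does not
    validate the signature or query Azure.
    """
    params = parse_qs(urlparse(url).query)

    # Account SAS tokens carry "srt" (signed resource types) and "ss" (services);
    # service SAS tokens carry "sr" (signed resource, e.g. "b" blob, "c" container).
    if "srt" in params:
        scope = "account"
    elif "sr" in params:
        scope = "service"
    else:
        scope = "unknown"

    # "sp" lists the granted permissions as single letters, e.g. "racwdl".
    permissions = params.get("sp", [""])[0]
    excess = sorted(set(permissions) - READ_ONLY_PERMISSIONS)

    return {"scope": scope, "permissions": permissions, "beyond_read_only": excess}


if __name__ == "__main__":
    # Hypothetical account SAS granting read, add, create, write, delete, list.
    url = (
        "https://exampleaccount.blob.core.windows.net/models/model.pt"
        "?sv=2020-08-04&ss=b&srt=sco&sp=racwdl"
        "&se=2030-01-01T00%3A00%3A00Z&sig=REDACTED"
    )
    print(describe_sas(url))
```

For a token like the hypothetical one above, the sketch reports an account-level scope plus add, create, delete, and write permissions, the combination that lets a holder overwrite or remove files rather than merely download them.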

In response to the findings, Microsoft said its investigation found no evidence of unauthorized exposure of customer data and that “no other internal services were put at risk because of this issue.” It also emphasized that customers need not take any action on their part.

The Windows maker further noted that it revoked the SAS token and blocked all external access to the storage account. The problem was resolved two days after responsible disclosure.

To mitigate such risks going forward, the company has expanded its secret scanning service to include any SAS token that may have overly permissive expirations or privileges. It said it also identified a bug in its scanning system that flagged the specific SAS URL in the repository as a false positive.
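The article does not describe how Microsoft's scanner evaluates expirations. As a hypothetical illustration of one such check, the Python sketch below reads the `se` (signed expiry) parameter from a SAS URL and flags tokens that stay valid longer than a chosen maximum lifetime. The function name `expiry_too_long`, the 30-day threshold, and the choice to flag tokens without an `se` value are assumptions, not details of Microsoft's service.

```python
from datetime import datetime, timedelta, timezone
from urllib.parse import parse_qs, urlparse


def expiry_too_long(url: str, now: datetime, max_lifetime: timedelta) -> bool:
    """Return True if a SAS URL stays valid longer than max_lifetime from now.

    Hypothetical check for illustration; `now` must be timezone-aware, and the
    threshold is a policy decision rather than an Azure setting.
    """
    values = parse_qs(urlparse(url).query).get("se")
    if not values:
        # No "se" in the URL: the expiry may come from a stored access policy
        # ("si"), so this sketch flags the token for manual review.
        return True

    # "se" is a UTC timestamp in ISO 8601 form, e.g. 2030-01-01T00:00:00Z,
    # or a date-only value such as 2030-01-01.
    expiry = datetime.fromisoformat(values[0].replace("Z", "+00:00"))
    if expiry.tzinfo is None:
        expiry = expiry.replace(tzinfo=timezone.utc)
    return expiry - now > max_lifetime


if __name__ == "__main__":
    now = datetime(2023, 9, 19, tzinfo=timezone.utc)
    # Hypothetical read-only blob SAS that remains valid for many years.
    url = (
        "https://exampleaccount.blob.core.windows.net/models/model.pt"
        "?sv=2020-08-04&sr=b&sp=r&se=2040-01-01T00%3A00%3A00Z&sig=REDACTED"
    )
    print(expiry_too_long(url, now, timedelta(days=30)))
```

An expiry check on its own only catches long-lived tokens; a fuller scanner would pair it with a scope and permissions check, and, as the false positive Microsoft reported shows, such rules still need their own testing.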

“Due to the lack of security and governance over Account SAS tokens, they should be considered as sensitive as the account key itself,” the researchers said. “Therefore, it is highly recommended to avoid using Account SAS for external sharing. Token creation mistakes can easily go unnoticed and expose sensitive data.”

This is not the first time misconfigured Azure storage accounts have come to light. In July 2022, JUMPSEC Labs highlighted a scenario in which a threat actor could take advantage of such accounts to gain access to an enterprise on-premises environment.

The development is the latest security blunder at Microsoft and comes nearly two weeks after the company revealed that hackers based in China were able to infiltrate the company’s systems and steal a highly sensitive signing key by compromising an engineer’s corporate account and likely accessing a crash dump of the consumer signing system.

“AI unlocks huge potential for tech companies. However, as data scientists and engineers race to bring new AI solutions to production, the massive amounts of data they handle require additional security checks and safeguards,” Wiz CTO and co-founder Ami Luttwak said in a statement.

“This emerging technology requires large sets of data to train on. With many development teams needing to manipulate massive amounts of data, share it with their peers or collaborate on public open-source projects, cases like Microsoft’s are increasingly hard to monitor and avoid.”

Author: data@thehackernews.com (The Hacker News)

Date: 2023-09-19 05:31:00