Grounding language to vision is a fundamental problem for many real-world AI systems, such as retrieving images or generating descriptions for the visually impaired. Success on these tasks requires models to relate different aspects of language, such as objects and verbs, to images. For example, to distinguish between the two images in the middle column below, models must differentiate between the verbs “catch” and “kick.” Verb understanding is particularly difficult because it requires not only recognising objects, but also understanding how different objects in an image relate to each other. To overcome this difficulty, we introduce the SVO-Probes dataset and use it to probe language and vision models for verb understanding.

In particular, we consider multimodal transformer models (e.g., Lu et al., 2019; Chen et al., 2020; Tan and Bansal, 2019; Li et al., 2020), which have shown success on a variety of language and vision tasks. However, despite strong performance on benchmarks, it is not clear whether these models have fine-grained multimodal understanding. Prior work shows that language and vision models can succeed at benchmarks without multimodal understanding: for example, answering questions about images based only on language priors (Agrawal et al., 2018) or “hallucinating” objects that are not in the image when captioning images (Rohrbach et al., 2018). To anticipate model limitations, work like Shekhar et al. proposes specialised evaluations to probe models systematically for language understanding. However, prior probe sets are limited in the number of objects and verbs. We developed SVO-Probes to better evaluate potential limitations in verb understanding in current models.

SVO-Probes includes 48,000 image-sentence pairs and tests understanding for more than 400 verbs. Each sentence can be broken into a

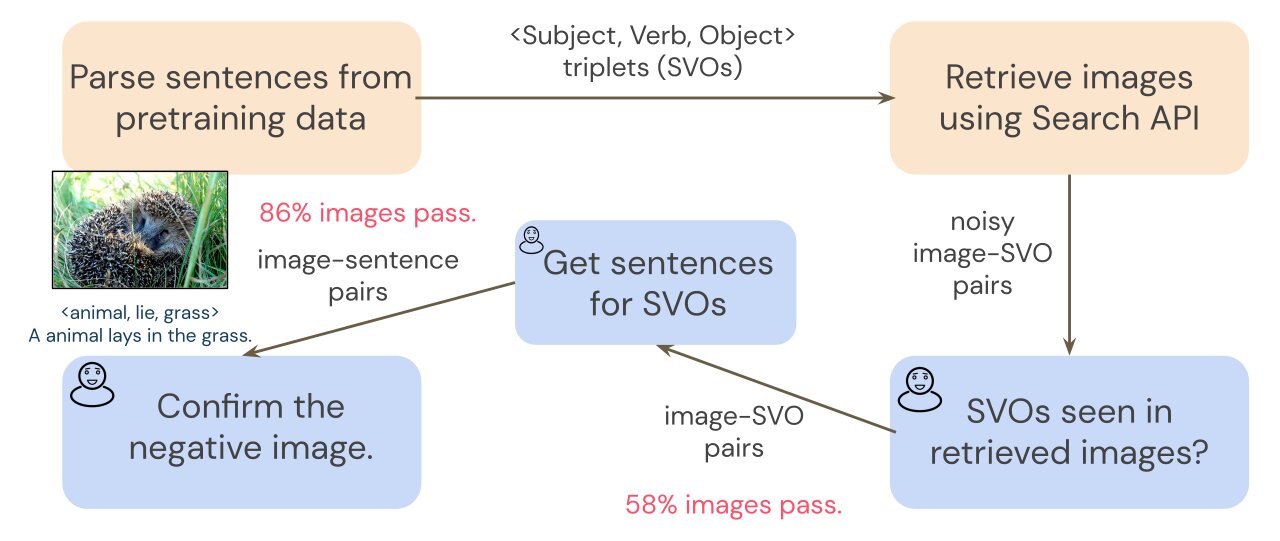

To create SVO-Probes, we query an image search with SVO triplets from a common training dataset, Conceptual Captions (Sharma et al., 2018). Because image search can be noisy, a preliminary annotation step filters the retrieved images to ensure we have a clean set of image-SVO pairs. Since transformers are trained on image-sentence pairs, not image-SVO pairs, we need image-sentence pairs to probe our model. To collect sentences that describe each image, annotators write a short sentence for each image that includes the SVO triplet. For example, given the SVO triplet

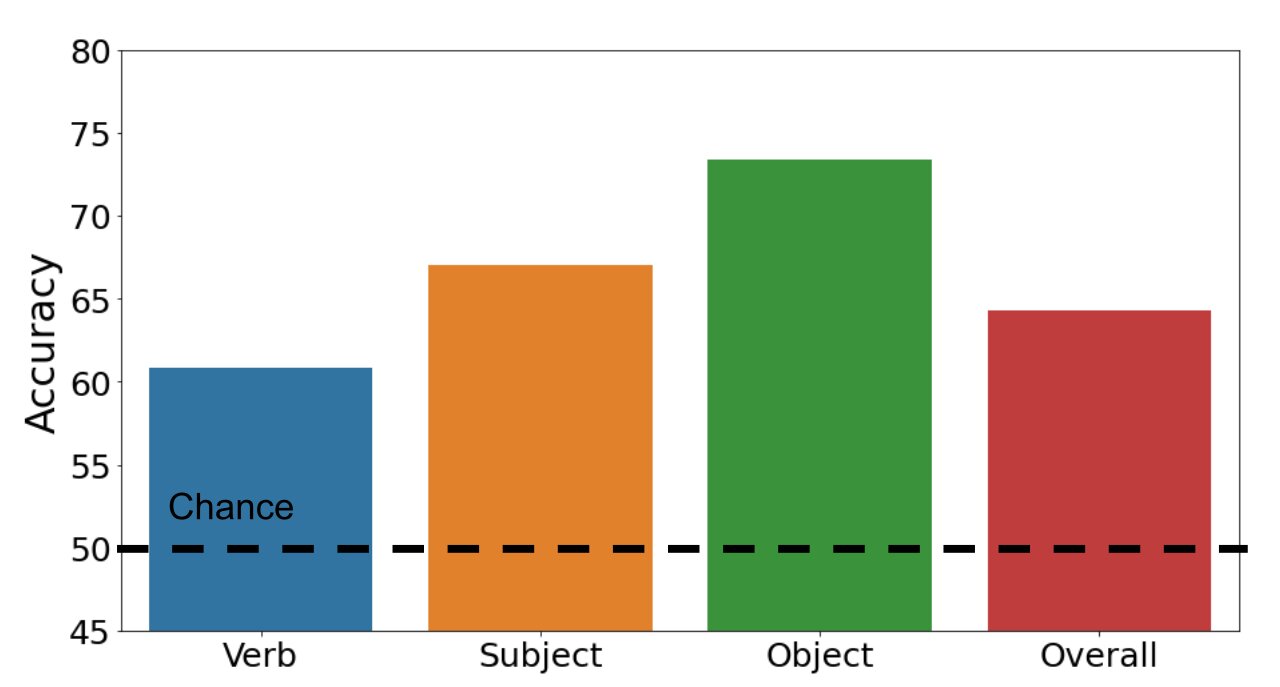

We examine whether multimodal transformers can accurately classify examples as positive or negative. The bar chart below illustrates our results. Our dataset is challenging: our standard multimodal transformer model achieves 64.3% accuracy overall (chance is 50%). While accuracy is 67.0% and 73.4% on subjects and objects respectively, performance falls to 60.8% on verbs. This result shows that verb recognition is indeed challenging for vision and language models.
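To make the breakdown above concrete, here is a minimal sketch of how accuracy could be computed overall and per probe type (subject, verb, object) for a binary match/no-match classifier. It is not the evaluation code from the SVO-Probes release: the `ProbeExample` record, its field names, the 0.5 threshold, and the toy scores are all assumptions made for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class ProbeExample:
    """Hypothetical record for one image-sentence pair (not the released format)."""
    probe_type: str  # "subject", "verb", or "object": the triplet slot being probed
    label: bool      # True if the image matches the sentence (positive example)
    score: float     # assumed model image-sentence match score in [0, 1]


def accuracy_by_probe_type(examples, threshold=0.5):
    """Return accuracy per probe type plus an "overall" entry."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for ex in examples:
        predicted = ex.score >= threshold  # classify as positive or negative
        for key in (ex.probe_type, "overall"):
            total[key] += 1
            correct[key] += int(predicted == ex.label)
    return {key: correct[key] / total[key] for key in total}


# Toy, made-up scores purely to exercise the function.
examples = [
    ProbeExample("verb", True, 0.9),
    ProbeExample("verb", False, 0.7),
    ProbeExample("object", False, 0.2),
    ProbeExample("subject", True, 0.4),
]
results = accuracy_by_probe_type(examples)
print(results)
```

Because each example is either a match or a non-match, a balanced probe set puts chance at 50%, which is the baseline the reported accuracies are compared against.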

We also explore which model architectures perform best on our dataset. Surprisingly, models with weaker image modeling perform better than the standard transformer model. One hypothesis is that our standard model (with stronger image modeling ability) overfits the train set. As both these models perform worse on other language and vision tasks, our targeted probe task illuminates model weaknesses that are not observed on other benchmarks.

Overall, we find that despite impressive performance on benchmarks, multimodal transformers still struggle with fine-grained understanding, especially fine-grained verb understanding. We hope SVO-Probes can help drive exploration of verb understanding in language and vision models and inspire more targeted probe datasets.

Visit our SVO-Probes benchmark and models on GitHub.

Author:

Date: 2022-02-22 19:00:00