A recent DeepMind paper on the ethical and social risks of language models identified large language models leaking sensitive information about their training data as a potential risk that organisations working on these models have the responsibility to address. Another recent paper shows that similar privacy risks can also arise in standard image classification models: a fingerprint of each individual training image can be found embedded in the model parameters, and malicious parties could exploit such fingerprints to reconstruct the training data from the model.

Privacy-enhancing technologies like differential privacy (DP) can be deployed at training time to mitigate these risks, but they often incur a significant reduction in model performance. In this work, we make substantial progress towards unlocking high-accuracy training of image classification models under differential privacy.

Differential privacy was proposed as a mathematical framework to capture the requirement of protecting individual records in the course of statistical data analysis (including the training of machine learning models). DP algorithms protect individuals from any inferences about the features that make them unique (including complete or partial reconstruction) by injecting carefully calibrated noise during the computation of the desired statistic or model. Using DP algorithms provides robust and rigorous privacy guarantees both in theory and in practice, and has become a de facto gold standard adopted by a number of public and private organisations.
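To make "carefully calibrated noise" concrete, here is a minimal sketch of the classic Laplace mechanism applied to a simple statistic, the mean of bounded values. It is an illustration only and is not taken from our paper or codebase: the function names, the assumption that the number of records is public, and the clipping bounds `lower` and `upper` are all choices made for this example.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) noise as the difference of two exponentials."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_mean(values, lower, upper, epsilon, rng):
    """Release the mean of `values` with epsilon-DP via the Laplace mechanism.

    Assumptions for this sketch: the number of records is public, and two
    datasets are neighbours if they differ in the value of one record.
    """
    n = len(values)
    # Clip each record so that a single individual has bounded influence.
    clipped = [min(max(v, lower), upper) for v in values]
    # Replacing one clipped record changes the mean by at most this much.
    sensitivity = (upper - lower) / n
    # Noise is calibrated to sensitivity / epsilon: smaller epsilon, more noise.
    scale = sensitivity / epsilon
    return sum(clipped) / n + laplace_noise(scale, rng)
```

Calling `private_mean([0.2, 0.4, 0.9], 0.0, 1.0, 1.0, random.Random(0))` returns the true mean plus random noise. Lowering `epsilon` strengthens the protection and increases the noise, which is the privacy and utility trade-off discussed throughout this post.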

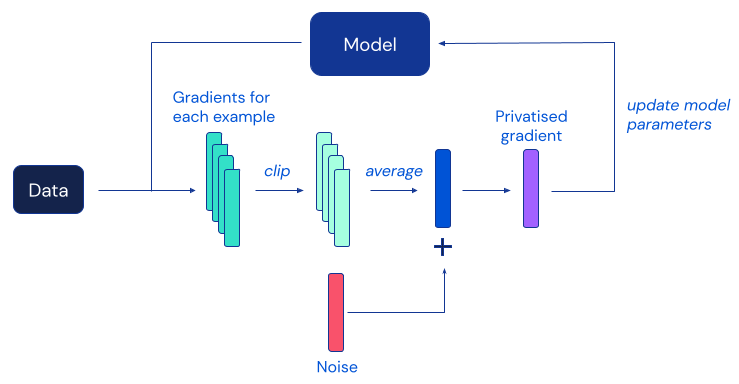

The most popular DP algorithm for deep learning is differentially private stochastic gradient descent (DP-SGD), a modification of standard SGD obtained by clipping the gradients of individual examples and adding enough noise to mask the contribution of any individual to each model update.
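The clip-then-add-noise update described above can be sketched in a few lines of plain Python. This is a simplified illustration under stated assumptions, not our open-sourced JAX implementation: the names `clip_by_l2_norm`, `dp_sgd_step`, `clip_norm` and `noise_multiplier` are hypothetical, gradients are plain lists of floats, and the privacy accounting needed to turn the noise level into a value of 𝜺 is left out.

```python
import math
import random


def clip_by_l2_norm(grad, clip_norm):
    """Rescale `grad` so that its L2 norm is at most `clip_norm`."""
    norm = math.sqrt(sum(g * g for g in grad))
    factor = min(1.0, clip_norm / norm) if norm > 0.0 else 1.0
    return [g * factor for g in grad]


def dp_sgd_step(params, per_example_grads, lr, clip_norm, noise_multiplier, rng):
    """Apply one DP-SGD update given one gradient per training example."""
    batch_size = len(per_example_grads)
    # 1. Clip every per-example gradient independently.
    clipped = [clip_by_l2_norm(g, clip_norm) for g in per_example_grads]
    # 2. Sum coordinate-wise; each example contributes at most clip_norm
    #    to this sum in L2 norm.
    summed = [sum(column) for column in zip(*clipped)]
    # 3. Add Gaussian noise whose standard deviation scales with clip_norm.
    sigma = noise_multiplier * clip_norm
    noisy = [s + rng.gauss(0.0, sigma) for s in summed]
    # 4. Average over the batch and take an ordinary gradient step.
    return [p - lr * g / batch_size for p, g in zip(params, noisy)]
```

Setting `noise_multiplier` to zero recovers SGD with per-example gradient clipping, which makes it easy to check the clipping logic in isolation. In a real training run the per-example gradients would come from the model and loss, and a privacy accountant would track the cumulative privacy cost across all steps.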

Unfortunately, prior works have found that in practice, the privacy protection provided by DP-SGD often comes at the cost of significantly less accurate models, which presents a major obstacle to the widespread adoption of differential privacy in the machine learning community. According to empirical evidence from prior works, this utility degradation in DP-SGD becomes more severe on larger neural network models, including the ones regularly used to achieve the best performance on challenging image classification benchmarks.

Our work investigates this phenomenon and proposes a series of simple modifications to both the training procedure and the model architecture, yielding a significant improvement in the accuracy of DP training on standard image classification benchmarks. The most striking observation coming out of our research is that DP-SGD can be used to efficiently train much deeper models than previously thought, as long as one ensures the model's gradients are well-behaved. We believe the substantial jump in performance achieved by our research has the potential to unlock practical applications of image classification models trained with formal privacy guarantees.

The figure below summarises two of our main results: a ~10% improvement on CIFAR-10 compared to previous work when privately training without additional data, and a top-1 accuracy of 86.7% on ImageNet when privately fine-tuning a model pre-trained on a different dataset, almost closing the gap with the best non-private performance.

These results are achieved at 𝜺=8, a standard setting for calibrating the strength of the protection offered by differential privacy in machine learning applications. We refer to the paper for a discussion of this parameter, as well as additional experimental results at other values of 𝜺 and on other datasets. Together with the paper, we are also open-sourcing our implementation to enable other researchers to verify our findings and build on them. We hope this contribution will help others interested in making practical DP training a reality.

Download our JAX implementation on GitHub.

Date: 2022-06-16 20:00:00